video log

Haegg / haegg group

The Path to MSP and The First Customer

November (#3) 2025

Are your reps setting the time, and wrapping up the call properly?

Despite the last post, I've been thinking about what "MVP" actually means for Calleoke. Not minimum viable — minimum sellable. What's the smallest thing someone would pay for?

My first instinct: build the teleprompter. Real-time coaching, karaoke-ball tracking, suggestions that evolve as confidence grows. The full vision.

But that's a lot of complex moving parts. And complex means slow. Slow means no customers. No customers means no feedback. No feedback means building blind.

So I asked a different question: what's the core value, stripped to its simplest form?

The Insight

The teleprompter tells you what to say. But before that matters, there's something simpler:

Did you hit the key objectives?

Every sales call has moments that matter:

- Did you confirm the duration of today's meeting?

- Did you agree on next steps in the call?

- Did you book the next meeting, send the invite and confirm receipt?

Miss one of these and the deal stalls. Not because you said the wrong thing — because you forgot or didn’t have time to say the right thing.

The MVP

So the MVP isn't the teleprompter. It's the objectives tracker.

Listen to the call. Track what matters. Alert when something critical is about to be missed.

"You're 20 minutes in and haven't confirmed next steps."

That's it. Simple. Concrete. Valuable today.
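
To make "listen, track, nudge" concrete, here is a minimal sketch of what an objectives tracker could look like. It is illustrative only, not Calleoke's actual implementation: the `Objective` and `ObjectivesTracker` names, the trigger phrases, and the deadlines are all assumptions, and plain keyword matching stands in for whatever really decides that an objective was met.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    name: str            # wording used in the nudge
    keywords: tuple      # hypothetical trigger phrases (assumption, not from the product)
    deadline_min: float  # minutes into the call by which this should be done
    done: bool = False


@dataclass
class ObjectivesTracker:
    objectives: list

    def hear(self, utterance: str) -> None:
        """Mark an objective as done when one of its trigger phrases is heard."""
        text = utterance.lower()
        for obj in self.objectives:
            if not obj.done and any(k in text for k in obj.keywords):
                obj.done = True

    def nudges(self, elapsed_min: float) -> list:
        """Return one alert per objective that is still open past its deadline."""
        return [
            f"You're {elapsed_min:.0f} minutes in and haven't {obj.name}."
            for obj in self.objectives
            if not obj.done and elapsed_min >= obj.deadline_min
        ]


tracker = ObjectivesTracker([
    Objective("confirmed the meeting duration", ("do we still have",), 2),
    Objective("confirmed next steps", ("next step",), 20),
])
tracker.hear("Do we still have thirty minutes today?")
print(tracker.nudges(20))
```

The tracker only needs two inputs, the running transcript and the elapsed time, which is what makes it so much simpler than a real-time teleprompter.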

Why This First

- It's the foundation anyway — You need to understand what happened before you can suggest what to say next.

- Faster to build — No complex real-time UI choreography. Just listen, track, nudge.

- Easier to sell — "Never forget to book the next meeting" is a problem people know they have.

The teleprompter is where I'm going. The objectives tracker is how I get the first check.

Next

Find the right pilot customer. Someone with real calls, real objectives, real stakes. Their process becomes the blueprint. We build it together.

If that sounds like you — let's talk.

Currently Implementing - Core Philosophies

November (#2) 2025

I've been heads-down on what makes real-time coaching actually feel right. Not just "AI that helps with sales calls" — that's too vague. I needed principles that guide every design decision.

Here's what I landed on:

[Figure: Partner, Not Supervisor. Rep goes off-script → Calleoke notices → Adapts direction → New suggestion. Labels: "corrects you" vs. "adapts with you". Caption: You took things somewhere unexpected? Here's help for wherever you ended up.]

1. Partner, Not Supervisor

Most tools feel like a manager watching over your shoulder. Calleoke should feel like a brilliant colleague in your ear.

When you go off-script — and you will, you're in the conversation, the AI isn't — Calleoke doesn't correct you. It adapts. You took things somewhere unexpected? Here's help for wherever you ended up.

No "you should have said X" energy. Just collaboration.

The same coaching works for everyone. Experienced reps glance and riff. Newer reps follow more closely. One product, used differently.

[Figure: Thinking Out Loud. AI confidence (0% to 100%) rises from when the customer starts speaking to when the customer finishes, as the hint evolves: "Hmm..." → "Sounds like pricing..." → "Price concern" → "Here's how to respond". Caption: Calleoke shows its thinking as it develops: not a loading spinner, a window into reasoning.]

2. Thinking Out Loud

Most AI: wait for input → process → show answer. You see "thinking..." then suddenly a result. Feels slow and dumb, even when it's fast.

Calleoke shows its thinking as it develops. Customer starts talking, you see: "Hmm, sounds like a concern..." Customer continues: "Looks like pricing..." Customer finishes: "Price objection — here's how to show ROI."

Confidence grows visibly. Not a loading spinner. A window into Calleoke's mind working alongside yours.
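
The progression above can be sketched as a function that is re-run on the partial transcript each time new words arrive. This is a rough illustration under stated assumptions, not how Calleoke works internally: `TOPICS`, `evolving_hint`, and the confidence values 0.2 / 0.6 / 1.0 are made up for the example, and a keyword lookup stands in for a real model.

```python
# Hypothetical keyword lists; a real classifier would likely be a model, not a lookup.
TOPICS = {
    "pricing": ("price", "cost", "expensive", "budget"),
    "timing": ("later", "next quarter", "not now"),
}


def evolving_hint(partial_transcript: str, finished: bool) -> tuple:
    """Return (confidence, hint) for what the customer has said so far."""
    text = partial_transcript.lower()
    # Count keyword hits per topic and keep the best-matching topic.
    hits = {topic: sum(k in text for k in kws) for topic, kws in TOPICS.items()}
    topic, count = max(hits.items(), key=lambda kv: kv[1])
    if count == 0:
        return 0.2, "Hmm..."                     # nothing recognisable yet
    if not finished:
        return 0.6, f"Sounds like {topic}..."    # tentative guess, customer still talking
    return 1.0, f"{topic.capitalize()} concern. Here's how to respond."


print(evolving_hint("Well, I'm not sure", finished=False))
print(evolving_hint("Well, I'm not sure about the price", finished=False))
print(evolving_hint("Well, I'm not sure about the price for our team", finished=True))
```

The point of the design is that the UI always has something to show, and each call refines the previous hint instead of replacing a spinner with a finished answer.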

3. Tracking, Not Blocking

When you're delivering lines, the teleprompter should feel like a karaoke ball — alive and tracking with you, not frozen and waiting.

Lines light up as you speak. They fade when done. Next line promotes smoothly. If new suggestions need to appear, they queue up and arrive at natural pauses, not mid-sentence.

The whole thing breathes.
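
The "queue up and arrive at natural pauses" behaviour could be sketched like this. It is a simplified illustration, not the product's code: `SuggestionQueue` and the 0.7-second pause threshold are assumptions chosen for the example.

```python
from collections import deque


class SuggestionQueue:
    """Holds new suggestions while the rep is speaking and releases them at a pause."""

    def __init__(self, pause_threshold_s: float = 0.7):
        # Hypothetical threshold: how much silence counts as a "natural pause".
        self.pause_threshold_s = pause_threshold_s
        self._pending = deque()

    def push(self, suggestion: str) -> None:
        """Queue a suggestion without showing it yet."""
        self._pending.append(suggestion)

    def release(self, silence_s: float) -> list:
        """Return queued suggestions only if the rep has been silent long enough."""
        if silence_s < self.pause_threshold_s:
            return []  # mid-sentence: show nothing new
        released = list(self._pending)
        self._pending.clear()
        return released


queue = SuggestionQueue()
queue.push("Ask about their budget cycle")
print(queue.release(silence_s=0.1))  # rep still talking
print(queue.release(silence_s=1.0))  # natural pause
```

Keeping the queue separate from the display means the teleprompter never has to interrupt a line that is already being delivered.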

The Test

Every UI decision gets checked against these three. Does it feel collaborative? Does it show thinking? Does it track without blocking?

Yes? → Ship it.

No? → Rethink.

Hit me up if you want to see the latest build 🔭

[Figure: Tracking Not Blocking. Script lines 1 to 5 shown in three states: delivering (rep speaking line 3), promotes (line complete, next rises), ready (smooth handoff). Caption: Analogous to a karaoke ball: alive and tracking with you, not frozen and waiting.]

Developer Rhythm / Ongoing Operations

November (#1) 2025

System Report:

→ Development cadence steady.

→ All major modules in iterative refinement cycle.

→ Back-end linking scheduled for next cycle.

Directive:

→ Continue tuning until flow achieves production speed and precision.

→ Await new test feedback.

MARKETING:

→ Visual assets conceptualised and produced

→ First promo conceptualised and produced

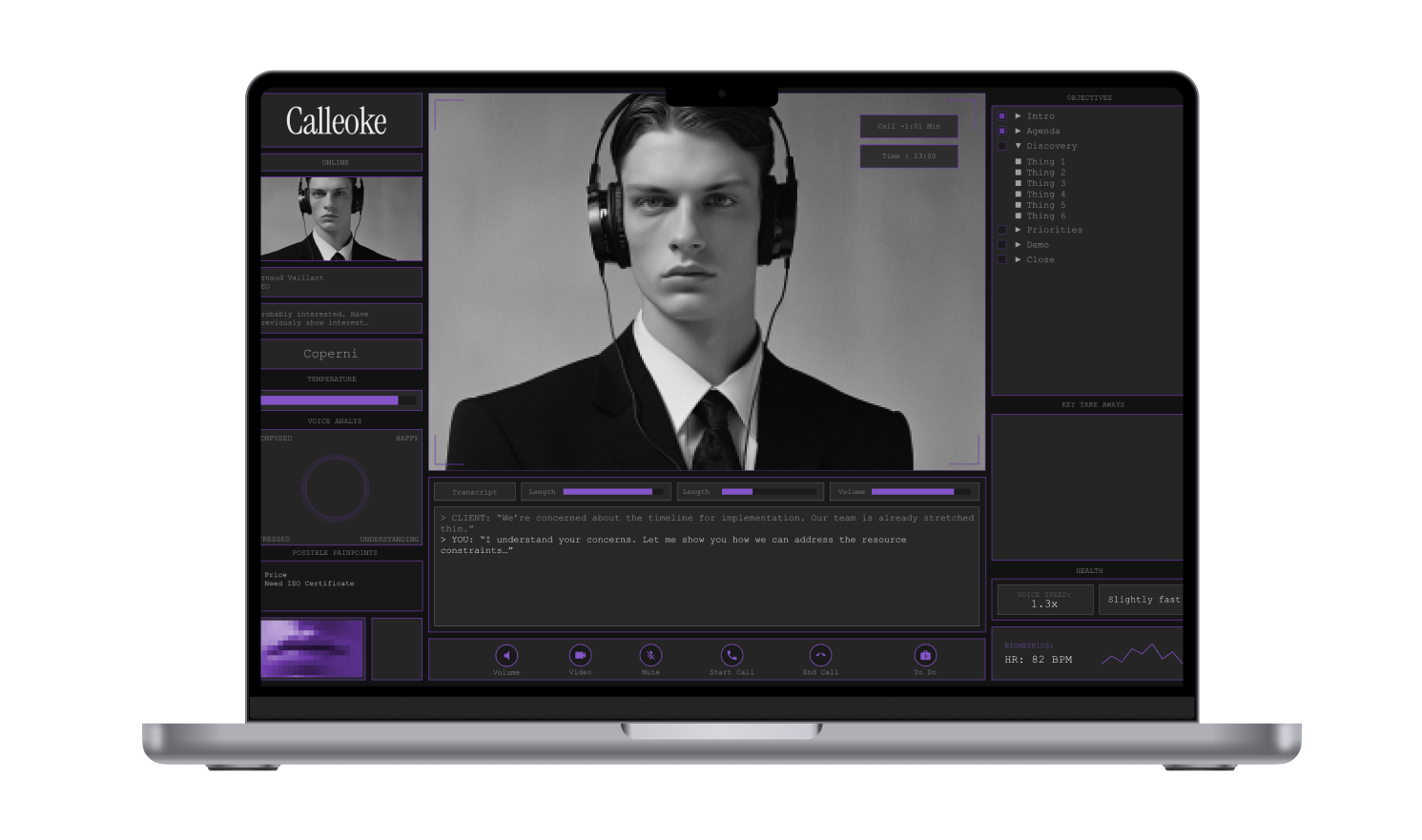

Front-End Prototype

November (#1) 2025

Summary:

→ Working on front-end behaviour and interaction logic.

→ Demonstration video recorded to show customer scenarios and user flow.

Purpose:

→ Pre-recorded prototype for visualising user interactions.

Next Steps:

→ Connect front-end with back-end modules (usable product expected for constrained cases).

→ Refine and tune model timings for improved performance.

→ Begin cinematic promo material production.

Progress Rhythm:

→ Development ongoing daily alongside other obligations.

→ Consistent progress observed.

Status:

→ Integration: PENDING 🔧

→ Model Refinement: ACTIVE 📡

→ Promo Assets: IN PRODUCTION 🔩

MCP (Minimum Conceptual Product) Launch

September 2025

Purpose:

→ Release intended to showcase the concept and technical foundation.

→ Not yet designed for production use.

Known Issues:

→ Transcript echo (customer/rep): temporary feedback issue, resolved with headphones.

→ Speed & timing: slow; tuning required.

→ UI refinement: incomplete; next iteration scheduled.

→ Quality of line generation: below target; additional model layering planned.

Technical Notes:

→ Using lightweight models for demo performance.

→ Integration tests with Apple’s latest models completed.

Plan:

→ Multi-model intelligence and timing refinement in upcoming release.

First Working Prototype

August 2025

Build Summary:

→ Real-time transcription online ☑

→ Speaker identification operational ☑

→ Dynamic prompting in progress ⚙️

Next:

→ Beta testers required for early validation.

→ Preparing demonstration for public review.

Status:

→ Prototype: FUNCTIONAL (Early) 🛠️

→ Latency: WITHIN DEMO RANGE ⚖️

→ UI: BASIC STRUCTURE 🐁

Acceleration Phase / Solo Build Initiated

Early 2025

Observation:

→ AI tooling ecosystem expanded.

→ Adjacent solutions confirmed timing and feasibility.

Decision:

→ Ceased search for technical cofounder.

→ Development initiated independently using vibe-coding and no-code frameworks.

Status:

→ Readiness: CONFIRMED 📡

→ Toolchain: STABLE ⛓️

→ Morale: HIGH 🔭

To Boldly Sell, Like No Man Sold Before

December 2023

Context:

→ Armed with a slide deck and frustration, the vision was pitched to technical founders.

→ Discussions promising — no actual path to build emerged.

Outcome:

→ Idea validated; execution unavailable. 🔭

Status:

→ Vision: ACTIVE 🔮

→ Technical Partnership: INCOMPLETE 🖇️

→ Resources: MANUAL BUILD INITIATED 🎛️